New Delhi: Researchers in Germany have developed an artificial intelligence model that can predict how people think and decide, and it’s eerily close to the real deal. Called Centaur, this new model has been trained on over 10 million human decisions and seems to be a major leap in combining psychology with machine learning.

Built by Helmholtz Munich’s Institute for Human-Centered AI, Centaur takes cues from how humans behave across situations, including tasks involving risk, memory, learning, and even moral decisions. The creators believe it could change how we understand mental health and cognitive science.

How Centaur is different from other AI models

Centaur was trained using a dataset called Psych-101, which contains choices from over 160 behavioural experiments and around 60,000 people. That’s a massive set of decisions, covering everything from gambling risk to learning from rewards.

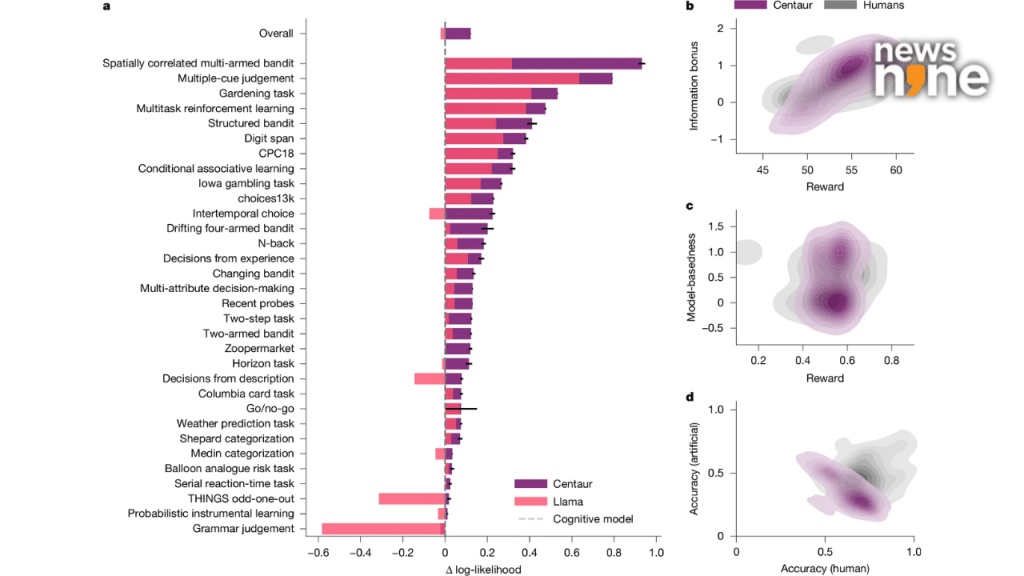

Goodness-of-fit on Psych-101.

Most earlier models struggled with a trade-off: they were either good at explaining decisions or good at predicting them, but rarely both. Centaur does both at once. Dr Marcel Binz and Dr Eric Schulz, who led the project, said it “combines predictive performance with psychological insights”, something that older models couldn’t manage.

The model can also estimate how long someone might take to make a choice and adapt to new, unfamiliar situations. That means Centaur doesn’t just copy past behaviour but can guess what people might do next, even in a different context.

What can it be used for?

The team believes Centaur could be useful in clinical settings, especially for mental health conditions. For example, understanding how people with anxiety or depression make choices might lead to better therapies. It could also be used to revisit old psychological studies with fresh eyes, something that was hard to do earlier without human subjects.

Centaur acts like a virtual lab. You can feed it different tasks or experiments, and it will show how a typical person might react. Dr Binz and Dr Schulz are now planning to add demographic and psychological traits to the model to make predictions more personal and more accurate.

Ethics, transparency and open access

The researchers aren’t keeping this tech locked away. They’ve released it as open source, meaning anyone can study, test, or run it locally. “We want to make sure this stays transparent and usable,” said the team, adding that it’s a way to keep ethical control and data privacy in check.

They also plan to explore how Centaur’s internal patterns reflect real human thought. The next step is to dig into how the AI organises information and compare it with how healthy individuals and those with mental health conditions process similar decisions.

Why Psych-101 matters

Psych-101, the data behind Centaur, is a first-of-its-kind dataset. It includes experiments on topics like:

- Reward-based learning

- Memory under stress

- Reaction times

- Risk-taking

- Ethical choices

The data has been cleaned and formatted so that large language models can understand it. This makes it one of the richest resources available for studying human cognition through AI.
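To give a rough idea of what "formatted so that large language models can understand it" could look like, here is a minimal Python sketch that turns the trials of a made-up two-option reward task into a plain-text transcript. Everything in it is an assumption for illustration: the `Trial` fields, the function names, the wording of each line and the `<<...>>` markers around the participant's choice are hypothetical and are not taken from the article or presented as the researchers' actual format.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Trial:
    """One decision in a hypothetical two-option reward-learning task."""
    options: List[str]        # labels shown to the participant, e.g. ["A", "B"]
    choice: str               # label the participant picked
    reward: Optional[float]   # points received, or None if no feedback was given


def trial_to_text(trial: Trial) -> str:
    """Render a single trial as one line of plain English (illustrative wording)."""
    if trial.choice not in trial.options:
        raise ValueError(f"choice {trial.choice!r} is not one of {trial.options}")
    shown = " and ".join(trial.options)
    # The <<...>> markers are an assumed convention to make the choice easy to locate.
    line = f"You see options {shown}. You choose <<{trial.choice}>>."
    if trial.reward is not None:
        line += f" You receive {trial.reward:g} points."
    return line


def session_to_prompt(instructions: str, trials: List[Trial]) -> str:
    """Join the task instructions and every trial into one text transcript."""
    return "\n".join([instructions] + [trial_to_text(t) for t in trials])


if __name__ == "__main__":
    demo = [Trial(["A", "B"], "A", 3.0), Trial(["A", "B"], "B", None)]
    print(session_to_prompt("Pick the option you think pays more.", demo))
```

Writing each experiment out as ordinary sentences like this is one way a language model could read many different tasks in the same format, which is the general idea the article describes.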

While Centaur may not feel emotions or have human instincts, it can mirror our decision-making well enough to be useful, and maybe even a little unsettling.

Helmholtz Munich’s open approach, combined with strong psychological grounding, makes this one of the more promising examples of AI being used to understand humans rather than replace them.