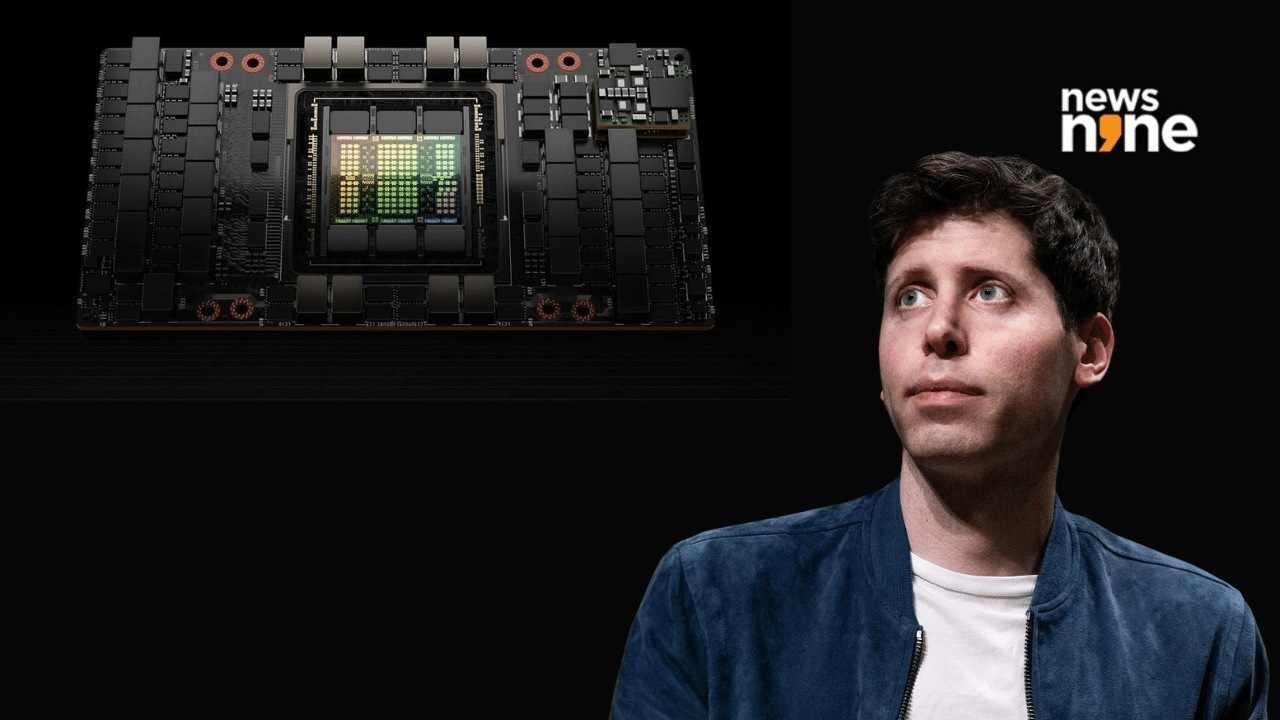

New Delhi: OpenAI CEO Sam Altman has made another bold claim on X, saying the company will cross "well over 1 million GPUs brought online" by the end of 2025. That's a huge number for any tech firm, but Altman didn't stop there. He followed it with a new target: figuring out how to scale that number by 100 times.

This isn't the first time Altman has raised eyebrows with his ambitious hardware plans. In early 2024, he reportedly sought to raise as much as $7 trillion to build out the global AI supply chain. That plan included chip factories, GPU farms, and energy infrastructure. This new update suggests OpenAI is moving quickly on the compute side of that vision.

we will cross well over 1 million GPUs brought online by the end of this year!

very proud of the team but now they better get to work figuring out how to 100x that lol

— Sam Altman (@sama) July 20, 2025

Altman’s GPU plans point to a much bigger problem

The race to build better AI isn't just about smarter models. It's also about who has the most computing muscle, and that's where GPUs come in. These powerful processors are the workhorses behind most modern AI systems. They make it possible to train large language models like the ones that power ChatGPT, run image generators, and serve agent systems that respond in real time.

Altman's post wasn't just a flex. It was a signal to the rest of the AI industry. Getting 1 million GPUs running is a sign of scale, but his comment about scaling that number 100x tells a different story. That goes beyond buying more hardware; it is a deeper challenge that calls for innovation.

What does “100x” even mean?

The idea of 100x-ing the GPU fleet sounds wild at first, but Altman is likely not just talking about buying 100 million chips. He seems to be hinting at a larger problem: how to support the compute demands of artificial general intelligence (AGI).

To get there, OpenAI and others might have to:

- Invent better, more efficient chips

- Design new computing architectures

- Solve power and cooling issues at massive scale

- Redesign entire data centers

- Develop new energy sources to support that load

All of this ties back to the idea that the next breakthroughs in AI might not come from the models themselves, but from the infrastructure behind them.

The $7 trillion plan: Still on the table?

In early 2024, reports suggested Altman was seeking to raise as much as $7 trillion (around ₹609 lakh crore) to build out the infrastructure for AI's future. The plan included not just GPU access but also chip factories, energy plants, and the global logistics to run it all.

That number sounded impossible to many, but Altman's latest post suggests he is still chasing that kind of scale. For Altman, the question now seems to be: how do you build the AI supercomputers of the future without breaking the planet or the bank?