New Delhi: After rolling out ChatGPT Health for everyday users, OpenAI has now moved deeper into hospitals and large care systems. The company has announced OpenAI for Healthcare, a new set of AI products aimed squarely at healthcare organisations that operate under strict regulatory requirements.

This launch comes at a time when doctors are stretched thin, paperwork keeps growing, and medical knowledge is scattered across journals, systems, and internal documents. OpenAI is positioning this new offering as infrastructure for hospitals rather than a consumer tool.

Physician use of AI nearly doubled in a year.

Today we launched OpenAI for Healthcare, a HIPAA-ready way for healthcare organizations to deliver more consistent, high-quality care to patients.

Now live at AdventHealth, Baylor Scott & White, UCSF, Cedars-Sinai, HCA, Memorial…

— OpenAI (@OpenAI) January 9, 2026

OpenAI for Healthcare takes shape after ChatGPT Health

OpenAI says the new offering is designed to help organisations deliver more consistent, high-quality care while supporting HIPAA compliance. The announcement confirms that ChatGPT for Healthcare is available starting now and is already rolling out to several major institutions.

Early adopters include AdventHealth, Baylor Scott & White Health, Boston Children’s Hospital, Cedars-Sinai Medical Center, HCA Healthcare, Memorial Sloan Kettering Cancer Center, Stanford Medicine Children’s Health, and the University of California, San Francisco.

Alongside ChatGPT for Healthcare, OpenAI is also expanding healthcare use of its API. Thousands of organisations already configure the OpenAI API for HIPAA-compliant workflows, including companies such as Abridge, Ambience, and EliseAI.

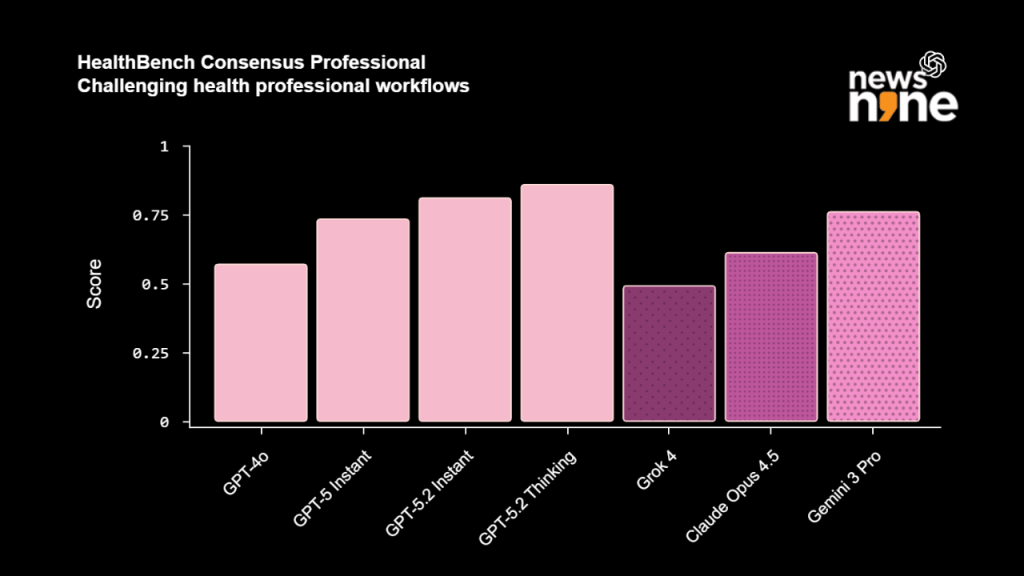

HealthBench Consensus, Professional: challenging health professional workflows | Source: OpenAI

Why hospitals are looking at AI more seriously now

Healthcare systems are facing rising demand and staff burnout, and administrative work eats into time meant for patients. At the same time, AI adoption among clinicians is accelerating.

OpenAI points to data from the American Medical Association, which shows that physicians’ use of AI nearly doubled in a year. Yet many clinicians still rely on personal tools as hospitals struggle to deploy AI in regulated settings.

OpenAI for Healthcare is meant to close that gap by offering a secure and enterprise-grade setup that hospitals can actually use at scale.

What ChatGPT for Healthcare is built to do

ChatGPT for Healthcare is not meant for casual chat. It is designed to support evidence-based reasoning in real clinical and administrative work while cutting down paperwork.

Key areas it focuses on include:

- Healthcare-specific models powered by GPT-5 and tested by physicians using benchmarks like HealthBench and GDPval

- Evidence retrieval with transparent citations from peer-reviewed studies, public health guidance, and clinical guidelines

- Integration with internal systems such as Microsoft SharePoint so answers reflect approved hospital policies

- Reusable templates for discharge summaries, patient instructions, clinical letters, and prior authorisation tasks

For doctors, this means less time rewriting the same documents and more consistency across teams.

Data control and compliance

OpenAI says patient data and protected health information stay under the organisation’s control. Content shared with ChatGPT for Healthcare is not used to train models.

The platform offers audit logs, data residency options, customer-managed encryption keys, and Business Associate Agreements to support HIPAA-compliant use. Access is managed through role-based controls, with SAML single sign-on for authentication and SCIM for user provisioning.

The role of the OpenAI API in hospitals

OpenAI for Healthcare also leans heavily on the company’s API platform. Developers can use GPT-5.2 models to build tools for patient chart summarisation, care coordination, and discharge workflows. Companies like Abridge, Ambience, and EliseAI are already building features such as ambient listening, automated clinical notes, and appointment scheduling.
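To make the chart summarisation use case concrete, here is a minimal sketch of how a developer might assemble a request for such a tool. It is illustrative only and is not taken from OpenAI's announcement: the model identifier `gpt-5.2`, the prompt wording, and the helper name `build_chart_summary_request` are all assumptions. The sketch only builds a chat-style request body (a model name plus a list of role/content messages) and sends nothing over the network. The sample notes are synthetic.

```python
import json

# Assumed model identifier: the article mentions "GPT-5.2 models" but does not
# give an exact API model name.
MODEL = "gpt-5.2"

# Hypothetical system prompt, written for this example only.
SYSTEM_PROMPT = (
    "You summarise patient charts for clinicians. "
    "Use only the notes provided and flag anything that is missing."
)


def build_chart_summary_request(chart_notes, max_notes=20):
    """Build a chat-style request body for summarising chart notes.

    Blank notes are dropped and at most `max_notes` notes are included.
    Nothing is sent anywhere; the caller decides how to submit the request.
    """
    notes = [note.strip() for note in chart_notes if note.strip()][:max_notes]
    if not notes:
        raise ValueError("at least one non-empty chart note is required")

    # Number the notes so a summary can refer back to individual entries.
    numbered = "\n".join(f"{i}. {note}" for i, note in enumerate(notes, start=1))

    return {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": f"Summarise these chart notes:\n{numbered}"},
        ],
    }


if __name__ == "__main__":
    # Synthetic example notes, not real patient data.
    request = build_chart_summary_request(
        ["Admitted with chest pain.", "ECG normal.", "Discharged next day."]
    )
    print(json.dumps(request, indent=2))
```

In a real deployment, a request like this would be submitted only through an API configuration covered by the compliance controls described above.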

Over the past two years, OpenAI says it has worked with more than 260 licensed physicians across 60 countries to review over 600,000 model outputs. These reviews shaped safety measures, model behaviour, and product updates.