New Delhi: Alibaba’s AI team has released a new version of its large language model, and this one is making waves across the AI community. The updated model, Qwen3-235B-A22B-Instruct-2507, officially dropped on July 22, 2025 (IST). Unlike the earlier Qwen3 release, which could switch between step-by-step reasoning and direct replies, this one works only in what the developers call “non-thinking mode.”

That means the model is designed to give direct answers instead of working through a visible chain of thought first. In short, it doesn’t talk to itself before replying. And judging by the benchmark numbers, it does that job very well.

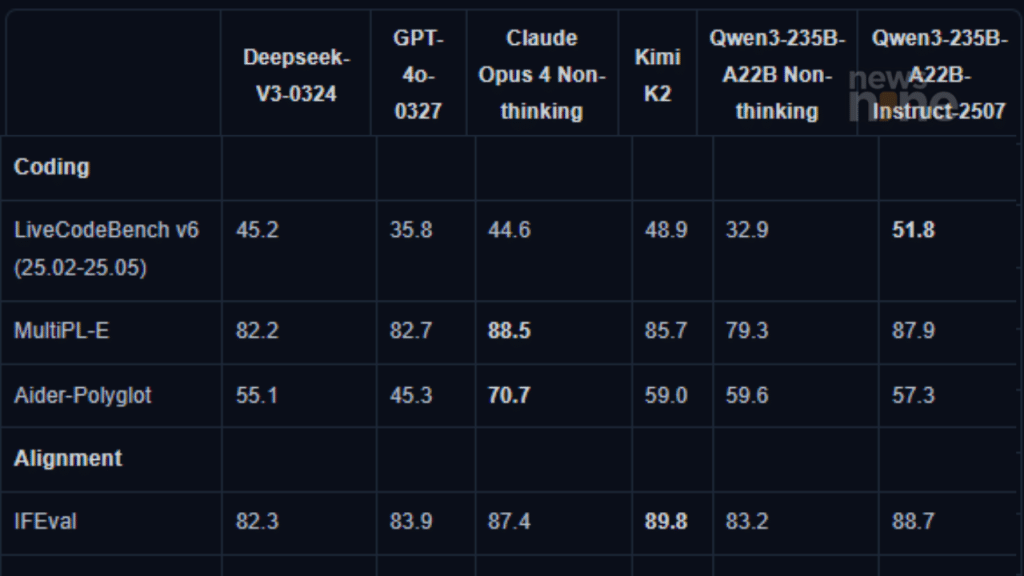

Qwen3-235B-A22B Instruct model benchmarks

Big upgrade, clean answers

The Qwen3-235B-A22B-Instruct-2507 is a Mixture-of-Experts (MoE) model with 235 billion parameters in total, but only about 22 billion of them are activated for each token it processes. That lets it pair the capacity of a very large model with the per-token compute cost of a much smaller one. It also supports a 256K-token context window (262,144 tokens natively), enough to handle long conversations and documents without breaking a sweat.
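
To make the “235 billion total, 22 billion active” idea concrete, here is a toy sketch of top-k expert routing in Python. It is a generic illustration, not Qwen’s actual router: the eight experts, the two-per-token selection, the three-number “tokens” and the simple scaling functions standing in for experts are all hypothetical values chosen for readability.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(logits, k):
    """Return (expert_index, gate_weight) pairs for the k highest-scoring experts."""
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Gate weights are renormalized over the chosen experts only
    gates = softmax([logits[i] for i in chosen])
    return list(zip(chosen, gates))

def moe_forward(token, experts, router, k):
    """Toy MoE layer: score every expert, but evaluate only the top-k."""
    # One router score per expert (a plain dot product in this toy)
    logits = [sum(w * x for w, x in zip(row, token)) for row in router]
    routed = route_top_k(logits, k)
    out = [0.0] * len(token)
    for idx, gate in routed:
        expert_out = experts[idx](token)  # unchosen experts are never called
        out = [o + gate * e for o, e in zip(out, expert_out)]
    return out, routed

# Hypothetical toy sizes: 8 experts, 2 active per token, 3-number tokens
NUM_EXPERTS, K = 8, 2
# Each toy "expert" just scales its input by a different factor
experts = [lambda v, s=i + 1: [s * x for x in v] for i in range(NUM_EXPERTS)]
router = [[(i + 1) * 0.1, -(i % 3) * 0.2, 0.05 * i] for i in range(NUM_EXPERTS)]

token = [1.0, 0.5, -0.2]
out, routed = moe_forward(token, experts, router, K)
print("experts used:", [idx for idx, _ in routed])
print("mixed output:", [round(x, 3) for x in out])
```

Because only the selected experts run, compute per token tracks the active parameters (for Qwen3, 22 ÷ 235, or roughly 9 percent of the total), even though every expert’s weights still have to be stored.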

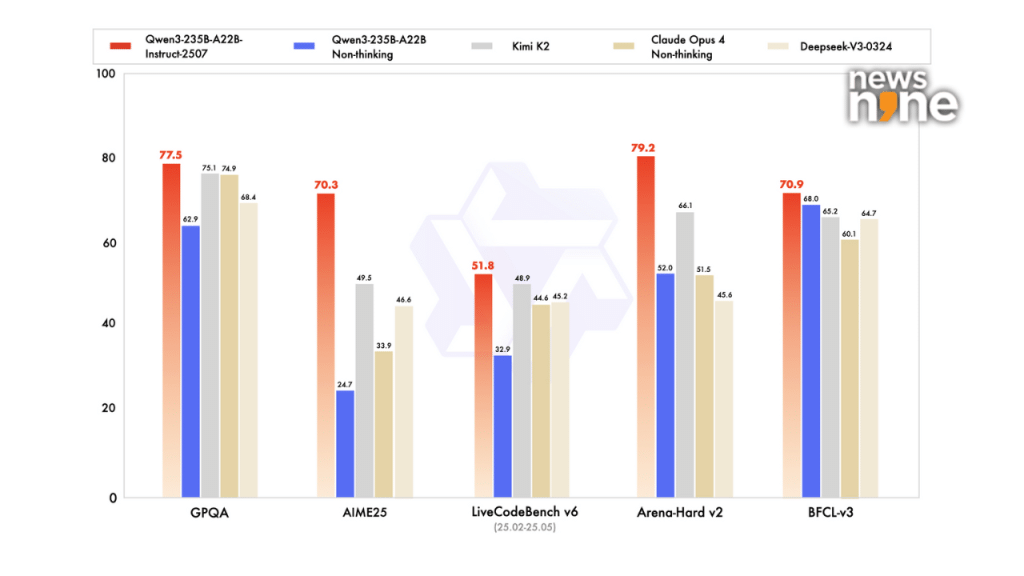

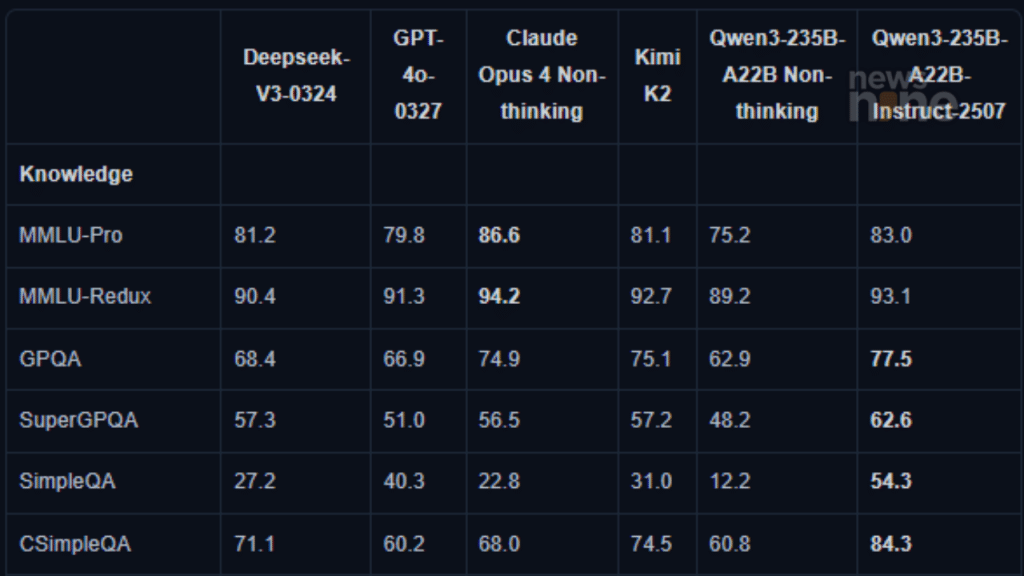

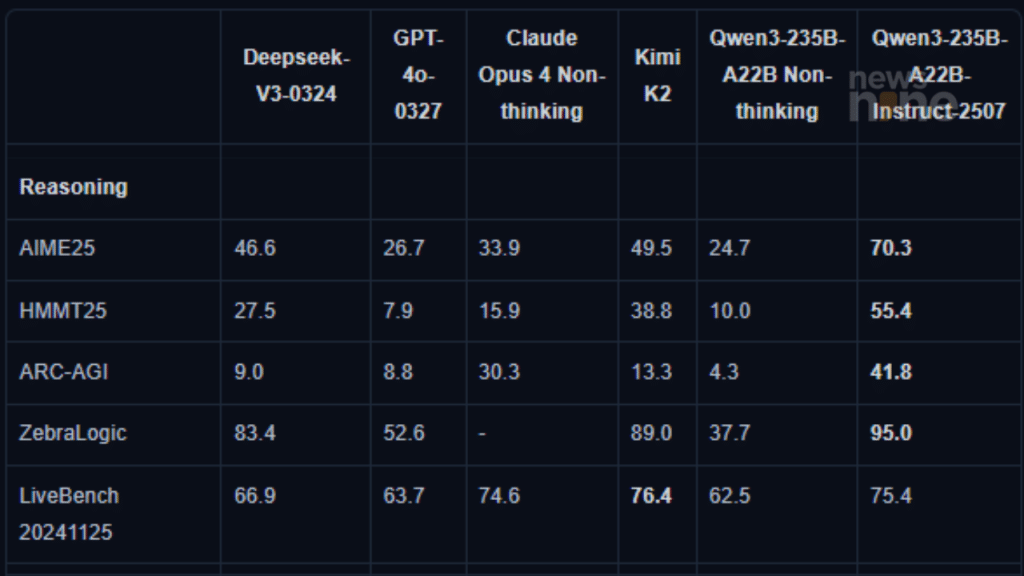

According to Alibaba’s official model card, this new version delivers big improvements in instruction following, reasoning, coding, science, and math. On the AIME25 math benchmark, it scored 70.3, way ahead of GPT-4o’s 26.7 and Claude Opus’s 33.9. Even on tough logic tests like ZebraLogic and ARC-AGI, it beat nearly every model in the comparison.

It also does well beyond English. On CSimpleQA, a Chinese-language test of factual knowledge, it scored 84.3, ahead of DeepSeek-V3’s 71.1 and GPT-4o’s 60.2. Gains like these matter in places like India, where AI tools need to work well across many languages.

New training style: fast vs slow thinking

The biggest change isn’t in performance, though. It’s in how the model was trained. The Qwen team has moved away from a single hybrid model that handled both direct answers and deep reasoning. Instead, it has split the work into two separately trained models. The Instruct-2507 model focuses only on fast, clean responses, and a separate model built for complex reasoning is expected later.

“Fast thinking” handles things like task execution, text understanding, and quick answers. “Slow thinking” is expected to handle longer reasoning tasks. By separating them, the developers say each model can do its specific job better.

Why agent performance matters

Another area where this model stands out is agent-like behavior. On BFCL-v3 (the Berkeley Function Calling Leaderboard), which tests how well a model can follow instructions across multiple steps and make correct calls to external tools, it scored 70.9, ahead of GPT-4o and Kimi-K2. That suggests the model could power AI assistants that take actions rather than just chat.
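
Function-calling tests like BFCL check whether a model emits a well-formed, correct call that the surrounding application then executes. The Python sketch below shows that application side. It assumes the model’s call arrives as JSON with `name` and `arguments` fields, a convention many serving tools use but an assumption here; `get_weather` is a hypothetical stub that returns placeholder values.

```python
import json

def get_weather(city: str, unit: str = "celsius") -> dict:
    """Hypothetical tool stub: a real app would query a weather API here."""
    return {"city": city, "temp": 31, "unit": unit}  # placeholder values

# Registry of tools the model is allowed to call
TOOLS = {"get_weather": get_weather}

def dispatch(raw_call: str) -> dict:
    """Parse a model-emitted tool call and run the matching Python function."""
    call = json.loads(raw_call)
    name = call["name"]
    if name not in TOOLS:
        return {"error": f"unknown tool: {name}"}
    args = call.get("arguments", {})
    if isinstance(args, str):  # some servers send arguments as a JSON string
        args = json.loads(args)
    try:
        return TOOLS[name](**args)
    except TypeError as exc:  # wrong or missing argument names
        return {"error": str(exc)}

# What a model might emit for "What's the weather in New Delhi?"
model_output = '{"name": "get_weather", "arguments": {"city": "New Delhi"}}'
print(dispatch(model_output))
```

Returning errors as data instead of raising them lets an agent loop hand the problem back to the model so it can try again.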

Alignment with human preferences has improved too. On Arena-Hard v2, where a GPT-4-family model acts as an automated judge comparing answers head to head, Qwen3-Instruct scored 79.2. That’s higher than Claude Opus and DeepSeek-V3.
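
A win rate is simply the share of head-to-head comparisons a model wins. The Python sketch below computes one from a list of judge verdicts, counting a tie as half a win. That tie rule and the `"win"`/`"tie"`/`"loss"` labels are assumptions for illustration, the verdicts are made up, and Arena-Hard’s own scoring pipeline is more involved than a plain tally.

```python
from collections import Counter

def win_rate(verdicts):
    """Share of pairwise comparisons won; a tie counts as half a win (assumed convention)."""
    counts = Counter(verdicts)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no verdicts to score")
    return (counts["win"] + 0.5 * counts["tie"]) / total

# Hypothetical judge verdicts for 10 prompts (not real Arena-Hard data)
verdicts = ["win"] * 7 + ["tie"] * 2 + ["loss"]
print(f"{win_rate(verdicts):.1%}")
```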

How people are reacting

On Reddit, the open-source community seems happy. One user on r/LocalLLaMA wrote that the model was a great step up, especially for those who don’t like chain-of-thought output. Some noted that it’s heavy to run on local hardware, though quantized (reduced-precision) versions are already making that easier.
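
Why is a model with only 22 billion active parameters still heavy to run locally? In a Mixture-of-Experts model, all 235 billion parameters have to be stored (in GPU memory, system RAM, or a mix) even though only about 22 billion are used per token. The back-of-the-envelope Python sketch below estimates weight storage at a few precisions; it counts weights only and ignores the KV cache, activations and the small overhead that quantization formats add, so real requirements are higher.

```python
PARAMS_TOTAL = 235e9   # every expert must be stored
PARAMS_ACTIVE = 22e9   # parameters actually used for each token

def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes).

    Ignores KV cache, activations and quantization overhead such as scales.
    """
    return num_params * bits_per_param / 8 / 1e9

for label, bits in [("BF16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label:>5}: {weight_memory_gb(PARAMS_TOTAL, bits):6.1f} GB stored, "
          f"{weight_memory_gb(PARAMS_ACTIVE, bits):5.1f} GB active per token")
```

At 16-bit precision the weights alone come to about 470 GB; 4-bit quantization cuts that to roughly 118 GB, which is why quantized builds matter so much for running the model locally.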

On X (formerly Twitter), some users called the release a direct challenge to Kimi-K2’s recent popularity, and a few are already crowning it the new king of open-source models. On Alibaba’s own benchmarks, it even outperforms some closed-source models, which is not something you see every day.

What’s next?

Alibaba’s Tongyi Qianwen team has hinted that the other half of their plan, a thinking-focused model, is still on the way. For now, though, the Instruct-2507 model sets a strong pace for where open-source AI is heading. It’s available for free on platforms like Hugging Face and ModelScope.