Mistral AI unveiled its new Mistral 3 model suite, delivering major performance gains on Nvidia’s latest hardware.

- Mistral AI launched its new Mistral 3 model suite optimized for Nvidia hardware.

- Nvidia reiterated long-term GPU infrastructure growth at a UBS tech conference.

- Traders on Stocktwits highlighted strong momentum for the day.

Shares of Nvidia Corp. (NVDA) rose 0.8% on Tuesday after Mistral AI launched its new Mistral 3 family of open-source, multilingual and multimodal models, a suite built and optimized across Nvidia’s supercomputing and edge platforms, including the company’s newest data-center hardware.

The flagship Mistral Large 3, a mixture-of-experts (MoE) system, activates only select parts of the network during inference, delivering significant gains in efficiency and scalability. With 41 billion active parameters, 675 billion total parameters, and a 256,000-token context window, the model is designed for enterprise AI workloads.
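To make the "active versus total parameters" idea concrete, here is a minimal sketch of how an MoE layer might pick experts for a single token. The top-k softmax routing, the `top_k_route` helper, and the gate scores are illustrative assumptions, since the announcement does not describe Mistral Large 3's actual router. Only the two parameter counts come from the figures above.

```python
import math

def top_k_route(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Hypothetical helper: a generic top-k router, not Mistral's implementation.
    """
    # Rank experts by gate score and keep the k best.
    top = sorted(range(len(gate_scores)),
                 key=lambda i: gate_scores[i], reverse=True)[:k]
    # Softmax over the selected experts only, so their weights sum to 1.
    peak = max(gate_scores[i] for i in top)
    exps = [math.exp(gate_scores[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

# Parameter counts quoted in the article.
ACTIVE_PARAMS = 41e9
TOTAL_PARAMS = 675e9
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS  # roughly 6% of the weights per token

# Made-up gate scores for four experts; only two of them run for this token.
routes = top_k_route([0.1, 2.0, -1.0, 1.5], k=2)
```

Under these assumptions, the experts that are not selected do no work for that token, which is why per-token compute tracks the 41 billion active parameters rather than the 675 billion total.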

Mistral AI Unlocks 10x Gains On Nvidia’s Blackwell Platform

By combining the Mistral Large 3 architecture with Nvidia GB200 NVL72 systems, the company said it achieved a 10x performance improvement over the previous-generation Nvidia H200, a leap that reduces compute cost per token and improves energy efficiency for large-scale training and inference.

The model’s granular MoE routing taps into Nvidia’s NVLink coherent memory and parallelism features, alongside NVFP4, the company’s accuracy-preserving low-precision format. Inference software including Nvidia Dynamo, TensorRT-LLM, SGLang and vLLM is also being tuned for the Mistral 3 family.

Mistral AI also released nine small “Ministral 3” models optimized for Nvidia’s edge platforms, including Spark, RTX PCs and laptops, and Jetson devices, with support through frameworks such as llama.cpp and Ollama. The entire Mistral 3 family is openly available and will soon be deployable through Nvidia NIM microservices.

Nvidia CFO Pushes Back Against AI Bubble Fears

At the UBS Global Technology & AI Conference, Nvidia EVP and CFO Colette Kress reinforced the company’s long-term positioning on Tuesday, arguing that what some call an AI bubble is actually a structural transition from CPU-based computing to GPU-accelerated infrastructure.

“Most of all work done in the data center has been done with CPUs for years,” Kress said. She projected that global GPU-powered data-center infrastructure could reach $3 trillion to $4 trillion by the end of the decade, roughly doubling the existing base. Kress also highlighted Nvidia’s next-generation platforms, including Grace Blackwell and the soon-to-launch Vera Rubin systems, while expressing confidence in the company’s position amid rising competition.

Stocktwits Users Highlight Nvidia’s Strong Momentum Setup

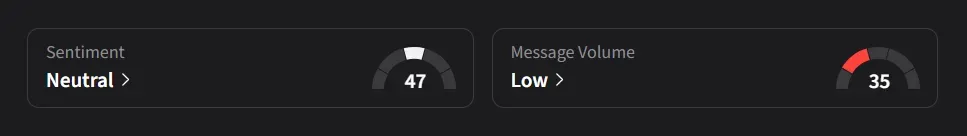

On Stocktwits, retail sentiment for Nvidia was ‘neutral’ amid ‘low’ message volume.

Traders said the stock is “acting like pure momentum fuel today,” and added that the move looked strong for the day.

Nvidia’s stock has risen 35% so far in 2025.

For updates and corrections, email newsroom[at]stocktwits[dot]com.